
Taming the Chaos of Generative AI

Anyone who has worked with generative Artificial Intelligence (AI) has experienced the paradox. One moment, the AI delivers a paragraph of astonishing brilliance, perfectly capturing the intended nuance and style. The next, it produces an output that is frustratingly mediocre, factually incorrect, or completely irrelevant to the task at hand. This inconsistency is the central challenge that prevents many creators, marketers, and organizations from fully harnessing the transformative potential of AI. It turns a promising tool into an unpredictable variable, making it difficult to rely on for consistent, high-quality content production.

The common reaction is to attribute this variability to the AI itself, viewing it as an inherently flawed “black box.” However, the analysis described below suggests that the root of the problem lies not with the technology but with the lack of a systematic, human-led process for interacting with it. Most users resort to “ad-hoc, trial-and-error methods,” a reactive approach that leads to inconsistent results, wasted effort, and the potential propagation of low-quality or misaligned content. This creates a significant “signal versus noise” problem, leaving users to navigate a chaotic sea of conflicting advice on how to achieve their goals. The issue is not a failure of the tool, but a failure of process.

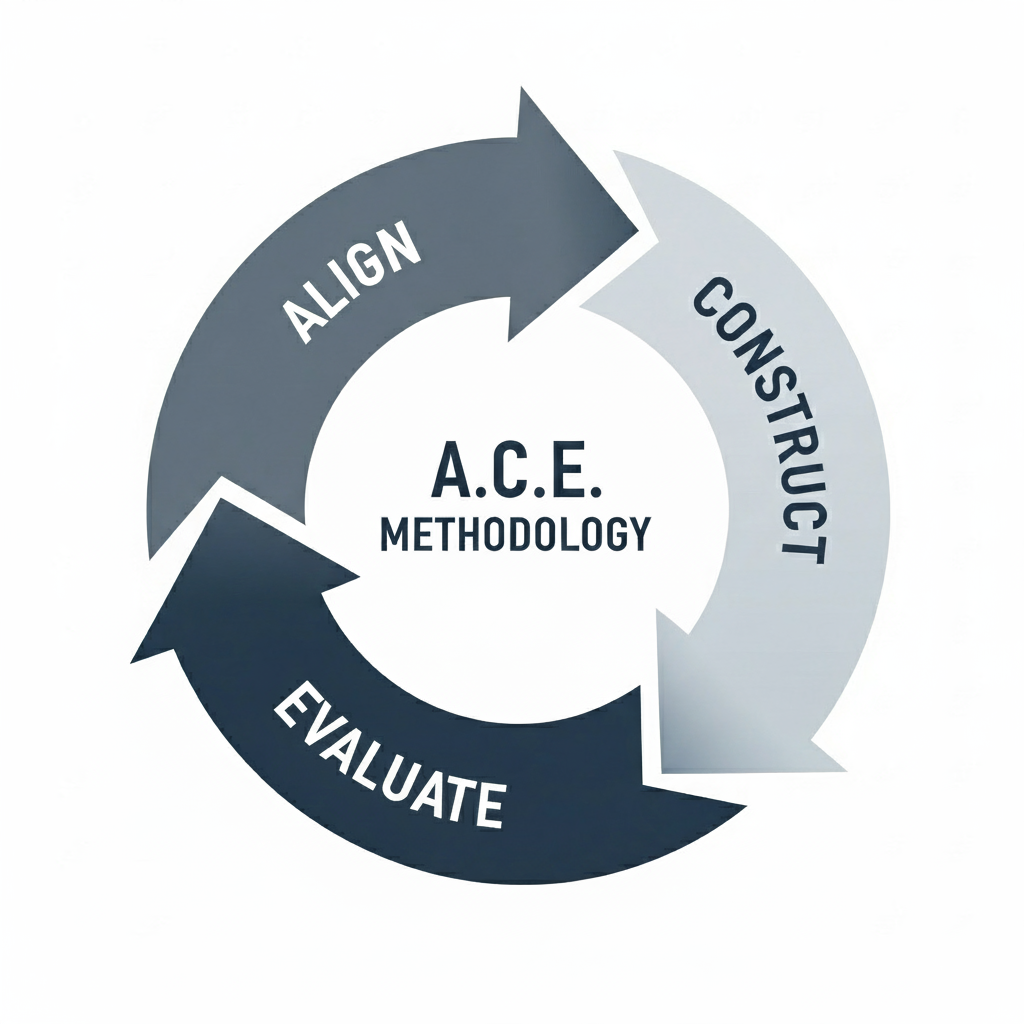

The solution is to move beyond guesswork and adopt a structured, repeatable framework designed to bridge the gap between human intent and AI execution. The A.C.E. (Align, Construct, Evaluate) Methodology provides this structure. It is a comprehensive framework that guides users through a systematic process for leveraging generative AI, transforming it from an unpredictable creative partner into a reliable and scalable content engine. More than just a collection of tips, A.C.E. functions as a strategic operating system for human-AI collaboration, providing a clear, logical workflow that ensures the final output is not only well-written but also purposeful, accurate, and aligned with specific objectives.

The Empirical Foundation: From Real-World Practice to a Robust Framework

To be truly effective, any methodology must be built on a foundation of evidence, not just theory. The A.C.E. framework is not an arbitrary construct; it is empirically grounded in a rigorous qualitative meta-analysis of how generative AI is actually being used in the real world. Its principles are derived directly from the systematic review, coding, and evaluation of over 100 publicly available YouTube tutorials demonstrating a wide array of techniques for AI-driven content creation.

This research approach was chosen specifically to ground the methodology in observed, practical application. By analyzing the methods taught by practitioners, the challenges they faced, and the strategies that led to successful outcomes, it was possible to identify clear patterns correlating specific approaches with higher-quality outputs. This process distilled the collective, implicit knowledge of the practitioners behind these tutorials into a set of core principles. The A.C.E. methodology, therefore, represents a codification of crowd-sourced wisdom: a bottom-up framework built from what demonstrably works in practice.

Emergent Best Practices: The Building Blocks of Quality

Through the comparative analysis of these 100+ tutorials, several core best practices consistently emerged as the key determinants of success, differentiating high-quality outputs from ineffective ones. These principles form the foundation of the A.C.E. framework:

- Specificity & Detail: High-performing examples consistently involved providing the AI with clear, unambiguous, and detailed instructions. This included specifying the desired topic, scope, length, and key elements to include.

- Context Provision: Supplying relevant background information—such as the target audience, the purpose of the content, and the desired tone—was crucial for improving the relevance and appropriateness of the output.

- Persona Definition: Instructing the AI to adopt a specific role or perspective (e.g., “Act as a marketing expert”) proved highly effective in tailoring the output’s style, voice, and focus.

- Structure & Formatting: Requesting outputs in specific formats (e.g., outlines, bullet points, tables) or providing structured input helped guide the AI toward more organized and usable results.

- Iteration & Refinement: Successful content creation was rarely a single-shot effort. It often involved multi-step processes or explicit refinement loops, where the output from one step informed the next.

- Decomposition: Breaking down complex content creation tasks, like writing a long report, into smaller, more manageable sub-tasks was a common feature in the most effective approaches.

- Groundedness: For tasks requiring factual accuracy, ensuring the AI’s output was grounded in provided source material was critical to preventing hallucinations and errors.

A crucial finding from this analysis was that high performance rarely resulted from a single, isolated “trick.” Instead, success was consistently associated with the thoughtful combination and integration of multiple best practices. Low-performing examples, conversely, often suffered from the neglect of several of these key principles simultaneously. This runs counter to the popular notion of searching for a single “magic prompt” and highlights the need for a holistic, multi-phase framework like A.C.E., which guides users to apply these principles systematically and in concert. The methodology is further supported by the strong convergence between these empirically observed practices and the established principles of prompt engineering documented by experts at major AI research labs, which provides external validation.

Phase 1: Align – The Strategic Prerequisite for Success

The A.C.E. Methodology begins with the Align phase, the foundational stage of strategic planning that occurs before a single word of a prompt is written. This phase is dedicated to answering the critical questions that define the purpose and parameters of the content: What is the primary objective? Who is the target audience? What is the desired tone and style? What contextual information is essential for the AI to know?

Why Alignment is Non-Negotiable

The research analysis strongly indicates that the Align phase is not merely the first step but a critical prerequisite for the success of the entire content creation process. Inadequate alignment at the outset fundamentally undermines the potential effectiveness of the subsequent Construct and Evaluate phases. Many of the observed failures in the analyzed tutorials stemmed directly from a lack of upfront strategic clarity. For example, vague prompts like “Write 3 tweets about X” consistently produced low-quality, generic outputs. This wasn’t just a failure of prompt construction; it reflected an underlying lack of clarity regarding the purpose of the tweets, their target audience, or their desired impact—all elements that are addressed during the Align phase.

Without clear goals and context established during Alignment, it becomes nearly impossible to construct a truly effective prompt or to meaningfully evaluate whether the generated output meets the intended requirements. The time invested in the Align phase is the highest-leverage activity in the entire workflow. A small improvement in strategic clarity upfront prevents massive rework, frustrating re-prompting, and extensive editing later on. Skipping this phase creates a “productivity debt” that must be paid back with interest during refinement, negating the very efficiency that AI promises.

Actionable Guidance for the Align Phase

To effectively implement the Align phase, creators should develop a clear brief for the AI by addressing the following points:

- Define the Objective: Articulate a single, clear, and measurable goal for the content. Is it to inform and educate, persuade a user to take an action, or entertain? (e.g., “Explain the concept of Retrieval-Augmented Generation to a non-technical audience.”).

- Profile the Audience: Create a concise persona for the target reader. Consider their level of expertise, their needs, and their motivations (e.g., “A busy marketing manager who needs scannable, actionable takeaways.”).

- Specify Tone & Style: Move beyond generic terms like “professional.” Choose specific, descriptive adjectives that guide the AI’s voice (e.g., “authoritative yet approachable,” “technical and precise,” “inspirational and conversational.”).

- Gather Essential Context: List the key pieces of information, data, background knowledge, or source materials that the AI absolutely needs to know to complete the task accurately and effectively.
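One way to make the brief concrete is to capture it as a small data structure that can be checked before any prompting begins. The sketch below is a minimal Python illustration, assuming a hypothetical `ContentBrief` class (not part of A.C.E. itself) whose fields mirror the four points above; `missing_fields()` flags anything still left blank, and the example values reuse the objective, audience, and tone examples from this list.

```python
from dataclasses import dataclass, field


@dataclass
class ContentBrief:
    """Hypothetical container for the four Align-phase decisions."""
    objective: str
    audience: str
    tone: str
    context: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Return the names of brief fields that are still empty."""
        missing = [name for name in ("objective", "audience", "tone")
                   if not getattr(self, name).strip()]
        if not self.context:
            missing.append("context")
        return missing

    def to_text(self) -> str:
        """Render the brief as plain text that can be pasted into a prompt."""
        lines = [
            f"Objective: {self.objective}",
            f"Audience: {self.audience}",
            f"Tone and style: {self.tone}",
            "Essential context:",
        ]
        lines += [f"- {item}" for item in self.context]
        return "\n".join(lines)


brief = ContentBrief(
    objective="Explain the concept of Retrieval-Augmented Generation to a non-technical audience.",
    audience="A busy marketing manager who needs scannable, actionable takeaways.",
    tone="authoritative yet approachable",
)
print(brief.missing_fields())  # ['context']
```

Here the check reports that no essential context has been gathered yet, which is exactly the gap the Align phase is meant to close before moving on to Construct.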

Phase 2: Construct – The Art and Science of AI Interaction

Once a clear strategic direction is established in the Align phase, the process moves to the Construct phase. This is the stage of practical execution, where the strategic brief is translated into effective instructions for the AI. It is best understood not merely as “prompt writing” but as a process of interaction design: crafting a comprehensive set of instructions, providing structured data, and designing multi-step workflows to guide the AI toward the desired outcome. An effective prompt is less like a simple request and more like a well-written program: it defines variables (context), declares functions (tasks), and specifies the output format with precision.

The Four Pillars of Effective Prompt Construction

The research identified four core principles, or pillars, that should be systematically applied to construct robust and effective prompts. These pillars are not independent options to be chosen from a menu; they are an interdependent system where each element reinforces the others to create a powerful, unambiguous instruction set for the AI.

- Specificity: The prompt must contain a clear, unambiguous task definition with explicit constraints. Vague requests lead to generic outputs.

- Context: The prompt should include the comprehensive background information gathered during the Align phase, including details on the target audience and the purpose of the content.

- Persona: The prompt should instruct the AI to adopt an appropriate role or perspective. This is a powerful technique for controlling the output’s tone, style, and expertise level.

- Structure: The prompt must clearly define the desired organizational framework and formatting requirements for the output, such as requesting a bulleted list, a table, or specific section headings.
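To see how the four pillars fit together, here is a minimal Python sketch that assembles them into a single prompt string, one section per pillar. The function name `build_prompt`, its parameters, and the specific constraint values (such as the 300-word limit) are hypothetical, chosen only for illustration; the persona, audience, and objective reuse examples given earlier in this article.

```python
def build_prompt(persona: str, task: str, constraints: list[str],
                 context: str, output_format: str) -> str:
    """Combine the four pillars into one instruction set, one section per pillar."""
    sections = [
        f"Act as {persona}.",                                          # Persona
        f"Task: {task}",                                               # Specificity: the task itself
        "Constraints:\n" + "\n".join(f"- {c}" for c in constraints),  # Specificity: explicit limits
        f"Context: {context}",                                         # Context from the Align phase
        f"Output format: {output_format}",                             # Structure
    ]
    return "\n\n".join(sections)


prompt = build_prompt(
    persona="a marketing expert",
    task="Explain the concept of Retrieval-Augmented Generation.",
    constraints=["Keep it under 300 words.", "Define every technical term you use."],
    context="The reader is a busy marketing manager who needs scannable, actionable takeaways.",
    output_format="One short introductory paragraph followed by three bullet points.",
)
print(prompt)
```

Keeping each pillar in its own labeled section makes it easy to see which one is missing or weak when an output disappoints.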

Advanced Construction Techniques

Beyond these foundational pillars, the analysis of high-performing tutorials revealed two advanced techniques that are particularly effective for complex tasks:

- Few-Shot Prompting: This involves providing the AI with 1-3 high-quality examples of the desired output directly within the prompt. This technique is exceptionally powerful for guiding the AI to adhere to a specific or nuanced style, tone, or format that is difficult to describe with words alone.

- Multi-Step Pipelines (Task Decomposition): For complex content like a long-form article or a detailed report, it is far more effective to break the process into a sequence of smaller, manageable prompts. For example, a pipeline might involve: Step 1: Generate a detailed outline. Step 2: Write the introduction based on the first point of the outline. Step 3: Write the next section, and so on. This iterative approach produces more coherent and well-structured results than a single, monolithic prompt.
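Both techniques can be expressed as plain functions. The Python sketch below assumes a hypothetical `generate` callable standing in for whatever model API is actually in use; `few_shot_prompt` embeds one to three examples ahead of the new input, and `outline_pipeline` decomposes an article into an outline step followed by one call per outline point, passing earlier sections forward so each step informs the next. A stub generator is included so the sketch runs offline; it is not a real model.

```python
from typing import Callable

# Hypothetical: any function that sends a prompt to a model and returns its text.
Generate = Callable[[str], str]


def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], new_input: str) -> str:
    """Place one to three worked input/output examples ahead of the real input."""
    if not 1 <= len(examples) <= 3:
        raise ValueError("use one to three examples")
    parts = [instruction]
    for source, target in examples:
        parts.append(f"Input: {source}\nOutput: {target}")
    parts.append(f"Input: {new_input}\nOutput:")
    return "\n\n".join(parts)


def outline_pipeline(generate: Generate, topic: str) -> list[str]:
    """Step 1: request an outline. Then write one section per outline point."""
    outline = generate(f"Write a numbered outline, one point per line, for an article on {topic}.")
    points = [line.strip() for line in outline.splitlines() if line.strip()]
    sections: list[str] = []
    for point in points:
        previous = "\n\n".join(sections) if sections else "(none yet)"
        sections.append(generate(
            f"Article topic: {topic}\nSections written so far:\n{previous}\n\n"
            f"Write the next section, covering: {point}"
        ))
    return sections


def fake_generate(prompt: str) -> str:
    """Offline stand-in for a model call, so the sketch runs as written."""
    if prompt.startswith("Write a numbered outline"):
        return "1. Introduction\n2. How it works\n3. Conclusion"
    return "Section text for: " + prompt.rsplit("covering: ", 1)[-1]


sections = outline_pipeline(fake_generate, "Retrieval-Augmented Generation")
print(len(sections))  # 3
```

Passing the sections written so far into each new call is one simple way to keep a decomposed article coherent; summaries of earlier sections would be an alternative when the running text grows long.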

The following table provides a clear, side-by-side comparison that illustrates the practical difference between a common, low-effort approach and the structured, high-impact A.C.E.-aligned approach.

Table 1: From Theory to Practice: High-Performing Prompt Structures

| Principle |
|---|
| Specificity |
| Persona |
| Structure |
| Few-Shot |

Phase 3: Evaluate – The Recursive Loop of Quality Assurance

The final phase of the methodology is Evaluate. This is the critical stage of assessment and refinement, where the AI’s output is systematically reviewed to ensure it meets the objectives defined in the Align phase and adheres to quality standards. This is not a simple “thumbs up or down” judgment but a structured quality assurance process that confirms the content’s fitness for purpose. This phase explicitly re-centers the human creator as the ultimate arbiter of quality. In an era of increasing automation, A.C.E. positions AI as a powerful assistant that produces drafts, while the human remains the strategist, editor, and final guardian of quality and accuracy.

A Multi-Dimensional Rubric for Assessment

To move beyond subjective impressions, the Evaluate phase encourages a structured assessment across several key dimensions of quality, adapted from the formal research rubric:

- Relevance & Adherence: Does the output directly address the prompt and fulfill all specified requirements? Does it align with the strategic goals established in the Align phase?

- Accuracy & Groundedness: Is the information factually correct? If source material was provided, is the output consistent with that source, avoiding unsupported claims or “hallucinations”?

- Fluency & Coherence: Is the text grammatically correct, well-written, and easy to read? Does it flow logically from one point to the next?

- Audience Appropriateness: Do the tone, style, vocabulary, and complexity level of the content match the defined target audience?
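The rubric lends itself to a simple checklist. The following Python sketch assumes a 1 to 5 score per dimension, assigned by a human reviewer, and a passing threshold of 4; the scale, the threshold, and the `review` function are illustrative assumptions rather than values taken from the research rubric.

```python
RUBRIC = (
    "Relevance & Adherence",
    "Accuracy & Groundedness",
    "Fluency & Coherence",
    "Audience Appropriateness",
)


def review(scores: dict[str, int], threshold: int = 4) -> list[str]:
    """Return the rubric dimensions scoring below `threshold`, in rubric order.

    Assumes human-assigned scores on a 1-5 scale (an illustrative choice).
    """
    for dimension in RUBRIC:
        if dimension not in scores:
            raise ValueError(f"missing score for {dimension!r}")
        if not 1 <= scores[dimension] <= 5:
            raise ValueError(f"score for {dimension!r} must be between 1 and 5")
    return [d for d in RUBRIC if scores[d] < threshold]


draft_scores = {
    "Relevance & Adherence": 5,
    "Accuracy & Groundedness": 2,
    "Fluency & Coherence": 4,
    "Audience Appropriateness": 3,
}
print(review(draft_scores))  # ['Accuracy & Groundedness', 'Audience Appropriateness']
```

Requiring a score for every dimension prevents a reviewer from silently skipping one, which is the main point of using a rubric instead of a single overall impression.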

The Power of Recursion: Evaluation as a Diagnostic Tool

Perhaps the most crucial concept of this phase is that Evaluation is recursive. It is a diagnostic tool that often feeds insights back into the earlier phases of the process. A poor output should not be seen as a failure but as valuable feedback that reveals a flaw in the Align or Construct phase. This mindset transforms frustration into a process of continuous improvement, helping the user refine not just the content but their entire workflow.

This feedback loop is central to mastering AI interaction. For example:

- If the generated text has an inappropriate tone, the output is only the symptom. The root cause likely lies in an imprecise audience definition in the Align phase or a weak persona instruction in the Construct phase.

- If the output contains factual errors or inconsistencies, the issue may stem from a lack of grounding instructions in the Construct phase or the quality of the source documents provided during Align.
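The diagnostic examples above can be written down as a lookup from a failing rubric dimension to the phase most likely at fault. This Python sketch encodes only the two mappings given in the text; the fallback for any other dimension (revisit both the brief and the prompt) is an assumption, and `diagnose` is a hypothetical helper name.

```python
# Suspected (phase, cause) pairs for each failing dimension, from the examples above.
ROOT_CAUSES = {
    "Audience Appropriateness": [
        ("Align", "imprecise audience definition"),
        ("Construct", "weak persona instruction"),
    ],
    "Accuracy & Groundedness": [
        ("Construct", "missing grounding instructions"),
        ("Align", "quality of the source documents"),
    ],
}

# Assumption: with no specific mapping, revisit both the brief and the prompt.
FALLBACK = [("Align", "revisit the brief"), ("Construct", "revisit the prompt")]


def diagnose(failed: list[str]) -> dict[str, list[str]]:
    """Group suspected root causes by the phase that should be revisited."""
    plan: dict[str, list[str]] = {}
    for dimension in failed:
        for phase, cause in ROOT_CAUSES.get(dimension, FALLBACK):
            causes = plan.setdefault(phase, [])
            if cause not in causes:  # avoid repeating the same advice
                causes.append(cause)
    return plan


print(diagnose(["Audience Appropriateness"]))
# {'Align': ['imprecise audience definition'], 'Construct': ['weak persona instruction']}
```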

By diagnosing the root cause of a deficiency, the user can make targeted adjustments to their process. Refinement can be done with targeted prompts (e.g., “Rewrite this paragraph to be more persuasive for a marketing audience”), but significant issues often require looping back to adjust the initial strategy or prompt design, ensuring that each cycle produces a better result and a more skilled user.
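The choice between targeted refinement and looping back can be sketched as a bounded loop. In this Python sketch, `generate` and `failing_dimensions` are hypothetical callables: the first stands in for a model call, the second for the human reviewer's judgment against the four rubric dimensions. Targeted rewrite prompts are tried for a fixed number of rounds (three here, an arbitrary choice); if issues persist, the remaining failures are returned as a signal to revisit Align or Construct rather than keep re-prompting.

```python
from typing import Callable


def refine(draft: str,
           generate: Callable[[str], str],
           failing_dimensions: Callable[[str], list[str]],
           max_rounds: int = 3) -> tuple[str, list[str]]:
    """Try targeted rewrites until the draft passes or the round budget is spent.

    Returns the latest draft plus any dimensions that still fail. A non-empty
    list means targeted prompts were not enough: loop back to Align or Construct.
    """
    for _ in range(max_rounds):
        failures = failing_dimensions(draft)
        if not failures:
            return draft, []
        # Targeted refinement prompt that names the specific problems found.
        draft = generate(
            "Rewrite the text below to fix these issues: "
            + ", ".join(failures) + ".\n\n" + draft
        )
    return draft, failing_dimensions(draft)


# Offline stubs so the sketch runs without a model or a human reviewer.
def stub_reviewer(text: str) -> list[str]:
    return ["Audience Appropriateness"] if "jargon" in text else []


def stub_generate(prompt: str) -> str:
    original = prompt.split("\n\n", 1)[1]
    return original.replace("jargon", "plain words")


final, remaining = refine("Full of jargon.", stub_generate, stub_reviewer)
print(final, remaining)  # Full of plain words. []
```

Capping the number of rounds is the design choice that matters here: it turns repeated failure into a diagnostic signal instead of an open-ended cycle of re-prompting.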

Beyond “Tricks” to a Principled Workflow

To unlock the true potential of generative AI and move beyond the cycle of inconsistent results, creators must shift their approach from a reliance on isolated “prompt tricks” to the adoption of a systematic, principled workflow. The A.C.E. (Align, Construct, Evaluate) Methodology provides this exact structure.

As the analysis of over 100 real-world tutorials demonstrates, A.C.E. is a robust, evidence-based framework that offers a reliable process for achieving consistent, high-quality, and goal-aligned content. It is not a theoretical construct but a practical synthesis of techniques observed to be effective in the field, and it is consistent with established principles of prompt engineering from AI research. By emphasizing strategic planning before generation (Align), promoting the integrated application of best practices during interaction (Construct), and mandating critical assessment and recursive refinement (Evaluate), the methodology transforms an unpredictable creative tool into a dependable strategic asset.

By adopting the A.C.E. framework, individuals and organizations can take control of their AI interactions. It empowers creators to move beyond frustration and wasted effort, enabling them to harness the power of generative AI in a more deliberate, effective, and quality-assured manner, consistently producing content that meets its strategic objectives.

For a deeper dive into the empirical data and the full academic validation of the A.C.E. framework, the complete research paper is available for review on ResearchGate.